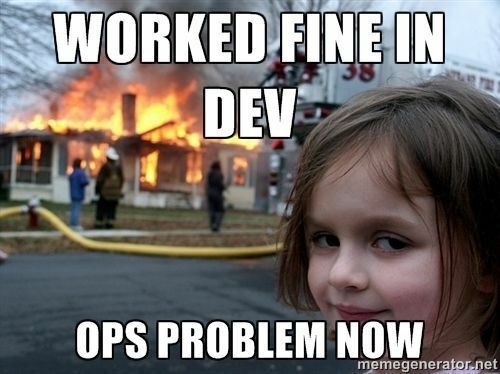

Just encountered another textbook case of environment-specific behavior making its way to production. You know the scenario - clean test runs, green pipelines, and a deployment that somehow manages to uncover every possible edge case we didn't account for.

The fascinating part isn't the divergence between environments so much as the almost ritualistic "works on my machine" declaration that follows. It's striking how this phrase has survived decades of technological evolution, from bare metal to containers to orchestrated clusters.

Some observations from today's incident:

- Local environment: Pristine, controlled, predictable

- Production environment: Meeting real-world chaos with unexpected grace

- Monitoring dashboards: Telling stories that local logs never hinted at

- Incident timeline: Growing longer as we discover more dependencies

- Postmortem doc: Already filling up with "lessons learned"

For those keeping score at home, this is precisely why we've been advocating for:

- Infrastructure as Code

- Parity between environments

- Comprehensive integration testing

- Observable systems

- Well-defined incident response procedures

- Blameless postmortems
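
To make the parity point a bit more concrete, here is a minimal sketch of a config drift check. It is not what we ran during this incident; the function name `config_drift` and the sample keys (`DB_POOL_SIZE`, `TZ`, `FEATURE_X`, `CACHE_TTL`) are hypothetical, and it assumes each environment's settings can be loaded into a flat dictionary.

```python
def config_drift(dev: dict, prod: dict) -> dict:
    """Return keys whose values differ, or that exist in only one environment.

    Each entry maps a key to a (dev_value, prod_value) tuple, with
    "<absent>" standing in for a key that one side does not define.
    """
    missing = object()  # sentinel so that a real value of None is not confused with "absent"
    drift = {}
    for key in sorted(dev.keys() | prod.keys()):
        d = dev.get(key, missing)
        p = prod.get(key, missing)
        if d != p:
            drift[key] = (
                "<absent>" if d is missing else d,
                "<absent>" if p is missing else p,
            )
    return drift


# Hypothetical settings, purely for illustration.
dev = {"DB_POOL_SIZE": "5", "TZ": "UTC", "FEATURE_X": "on"}
prod = {"DB_POOL_SIZE": "50", "TZ": "UTC", "CACHE_TTL": "300"}

for key, (dev_value, prod_value) in config_drift(dev, prod).items():
    print(f"{key}: dev={dev_value} prod={prod_value}")
```

Something like this could run as a pipeline step and fail the build when the drift is non-empty, apart from an explicit allowlist of keys that are expected to differ.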

The real value often emerges during incident reviews - those moments when we collectively realize how our systems actually behave versus how we think they behave. There's nothing quite like a production incident to highlight the gaps in our understanding.

Curious to hear about your experiences with environment-specific behaviors and how you've addressed them. What patterns have you found most effective in minimizing the dev-prod gap, and how do you handle the inevitable incidents when they occur?