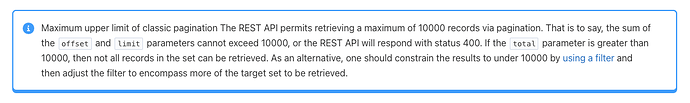

I am trying to extract data from the PagerDuty API with a Python script. The script reads a list of teams stored in JSON, gets all incidents for those teams within a specified date range, and then fetches each incident's details from https://api.pagerduty.com/analytics/raw/incidents/{incident_id}. This works for 1 or 2 teams, but when I add 500+ teams the result is around 10000 rows and I don't know how to retrieve them faster. After 2 hours the script was still running. I am also not sure how to get past the PagerDuty API limit. I have already added pagination using offset and limit. I could not find a straightforward guide on this. Please help.
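For reference, here is a minimal sketch of the kind of approach I am considering, not a tested solution. It assumes the `GET /incidents` endpoint with `since`, `until`, `team_ids[]`, `limit` and `offset` parameters and a `more` flag in the response, and it assumes offset pagination stops at roughly 10000 results, which is why the date range is split into smaller windows. The helper names, the window size, the team chunk size and the worker count are all hypothetical choices of mine.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API = "https://api.pagerduty.com/incidents"  # assumed list endpoint


def date_windows(since, until, days=7):
    """Split [since, until) into consecutive windows of at most `days` days."""
    start = since
    while start < until:
        end = min(start + timedelta(days=days), until)
        yield start, end
        start = end


def chunked(items, size):
    """Yield successive slices of `items` with at most `size` elements."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


def fetch_all(fetch_page, limit=100):
    """Follow offset pagination; fetch_page(offset, limit) -> (items, more)."""
    offset, results = 0, []
    while True:
        items, more = fetch_page(offset, limit)
        results.extend(items)
        if not more or not items:
            return results
        offset += limit


def make_fetch_page(api_key, team_ids, since, until):
    """Build a fetch_page callable for one date window and one team chunk."""
    def fetch_page(offset, limit):
        query = urlencode({
            "since": since.isoformat(),
            "until": until.isoformat(),
            "team_ids[]": team_ids,  # doseq=True repeats the key per team
            "limit": limit,
            "offset": offset,
        }, doseq=True)
        req = Request(f"{API}?{query}", headers={
            "Authorization": f"Token token={api_key}",
            "Accept": "application/vnd.pagerduty+json;version=2",
        })
        with urlopen(req) as resp:
            body = json.load(resp)
        return body["incidents"], body.get("more", False)
    return fetch_page


def collect(api_key, team_ids, since, until, workers=4):
    """Fetch every (window, team chunk) pair in parallel, deduplicated by id."""
    jobs = [
        make_fetch_page(api_key, chunk, start, end)
        for start, end in date_windows(since, until)
        for chunk in chunked(team_ids, 50)  # 50 is an arbitrary chunk size
    ]
    incidents = {}
    # A small pool keeps the request rate modest; the API may throttle bursts.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for page_items in pool.map(fetch_all, jobs):
            for incident in page_items:
                incidents[incident["id"]] = incident
    return list(incidents.values())
```

The idea is that each window and team chunk stays well below the pagination cap, and the independent requests run concurrently instead of one after another. I would call it as `collect(api_key, team_ids, datetime(2024, 1, 1), datetime(2024, 2, 1))`, with a real API key and the team IDs loaded from my JSON file.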

How to extract incidents with 10000 results from the PagerDuty API
